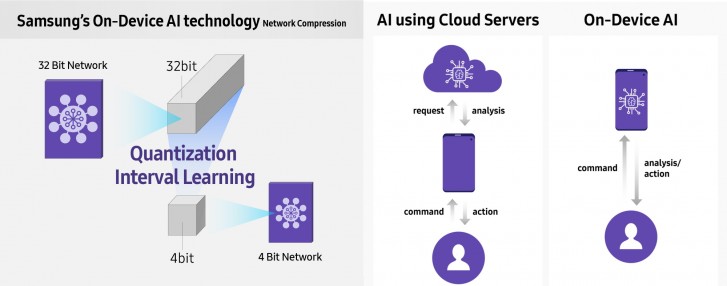

Samsung has announced a new NPU technology that will allow on-device AI to be faster, more energy-efficient and take up less space on the chip. Thanks to Quantization Interval Learning (QIL), Samsung can create 4-bit neural networks that retain the accuracy of a 32-bit network.
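
Samsung has not published its NPU code, so the following is only a rough sketch of the general idea behind interval-based 4-bit quantization: each value is clamped to an interval and then mapped to one of 2^4 = 16 evenly spaced levels. In QIL the interval itself is learned during training; here the function name `quantize_interval` and the bounds are hypothetical and fixed by hand.

```python
def quantize_interval(values, lower, upper, bits=4):
    """Map each value to one of 2**bits evenly spaced levels in [lower, upper].

    Simplified illustration: the interval is fixed by the caller, whereas
    QIL learns it during training. Returns the integer codes and the
    real values they decode back to.
    """
    levels = 2 ** bits - 1            # 15 steps between 16 levels for 4 bits
    step = (upper - lower) / levels   # spacing between adjacent levels
    codes = []
    for v in values:
        clipped = min(max(v, lower), upper)            # clamp into the interval
        codes.append(round((clipped - lower) / step))  # index of nearest level
    decoded = [lower + c * step for c in codes]        # approximate originals
    return codes, decoded


weights = [-2.7, -0.9, 0.05, 0.31, 1.5]
codes, approx = quantize_interval(weights, lower=-2.0, upper=2.0)
print(codes)  # [0, 4, 8, 9, 13]
```

Values inside the interval are off by at most half a step after decoding; values outside it (like -2.7 here) are clipped, which is why choosing the interval well matters.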

Using fewer bits significantly reduces the number of computations and the amount of hardware needed to carry them out. Samsung says it can achieve the same results 8x faster while using 40x to 120x fewer transistors.
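
As a back-of-the-envelope illustration of why fewer bits help, a 4-bit code needs one eighth of the storage of a 32-bit float, so two codes fit in a single byte. This plain-Python sketch uses hypothetical helpers `pack_4bit` and `unpack_4bit` (real NPUs use their own hardware-specific data formats) to pack codes and compare the size against float32 storage.

```python
import struct


def pack_4bit(codes):
    """Pack 4-bit codes (0..15) two per byte, low nibble first."""
    if any(not 0 <= c <= 15 for c in codes):
        raise ValueError("4-bit codes must be in the range 0..15")
    padded = list(codes) + [0] * (len(codes) % 2)  # pad odd-length input
    return bytes(padded[i] | (padded[i + 1] << 4) for i in range(0, len(padded), 2))


def unpack_4bit(data, count):
    """Recover the first `count` 4-bit codes from packed bytes."""
    out = []
    for byte in data:
        out.append(byte & 0x0F)  # low nibble
        out.append(byte >> 4)    # high nibble
    return out[:count]


codes = [0, 4, 8, 9, 13, 15, 1, 7]
packed = pack_4bit(codes)
as_float32 = struct.pack(f"<{len(codes)}f", *map(float, codes))
print(len(packed), len(as_float32))  # 4 32
assert unpack_4bit(packed, len(codes)) == codes
```

The 8x storage ratio simply follows from 32 / 4; on its own it says nothing about the speed or transistor savings Samsung quotes, which depend on the NPU design.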

This will make for NPUs that are faster and use less power, yet can still handle familiar tasks such as object recognition and biometric authentication (fingerprint, iris and face recognition). More complex tasks can be handled too, all on the device itself.

On-device AI is a boon to privacy, of course, but it has other benefits over cloud-based AI too. For example, connecting to a cloud server keeps the phone’s modem drawing power, and the round trip over the Internet adds latency.

Thanks to the high-speed, low-latency operation of QIL-based NPUs, Samsung foresees their use in things like self-driving cars (where accurate object recognition is key) and virtual reality too (perhaps something along the lines of Nvidia’s DLSS, which uses deep learning to improve image quality with little cost to the GPU).

Samsung will bring this technology to mobile chipsets “in the near future” as well as other applications (e.g. smart sensors).

from GSMArena.com - Latest articles https://ift.tt/30aUoGS
